The following is part 1 of a 3-part series on "Big Data."

By Bahar Gidwani

“Big Data” should be a useful tool for rating corporate social responsibility (CSR) and sustainability performance. It may be the answer to dealing with the rise in new ratings systems (it seems there is a new one announced each month) and with the disparities in scores that occur among these different systems.

In 2001, Doug Laney (currently an analyst for Gartner) foresaw that users of data were facing problems handling the Volume of data they were gathering, the Variety of data in their systems, and the Velocity with which data elements changed. These “three Vs” are now part of most definitions of the “Big Data” area.

Ratings in the CSR space appear to be a good candidate for a “Big Data” solution, because they face all three “V” problems.

- Volume: There are many sources of ratings. CSRHub currently tracks more than 175 sources of CSR information and plans to add at least another 30 sources over the next six months. Our system already contains more than 13,000,000 pieces of data from these sources that touch more than 80,000 companies. We hope eventually to expand our coverage to include several million companies.

- Variety: Each of these 175 sources uses different criteria to measure corporate sustainability and social performance. A number of comprehensive sustainability measurement approaches have been created. Unfortunately, each new entrant into the area seems compelled to create yet another system.

- Velocity: With hundreds of thousands of companies to measure and at least 175 different measurement systems, the perceived sustainability performance of companies constantly changes. Many of the available ratings systems track these changes only on a quarterly or annual basis.

Most systems for measuring the CSR and sustainability performance of corporations rely on human-based analysis. A researcher selects a set of companies to study, determines the criteria he or she wishes to use to evaluate their performance, and then collects the data needed to support the study. When the researcher can’t find a required data item in a company’s sustainability report or press releases, he or she may try to contact the company to get the data.

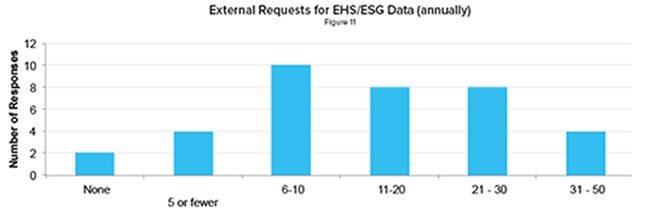

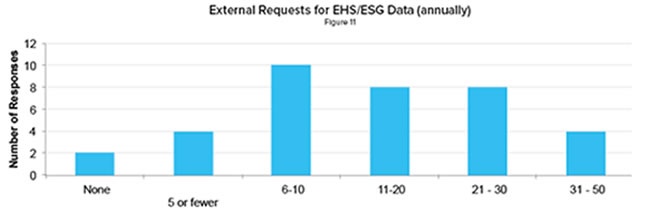

Some research firms try to streamline this process by sending out a questionnaire that covers all the things they want to know. Then, they follow up to encourage companies to answer their questions and follow up again after they receive the answers, to check the facts and be sure their questions were answered consistently. An NAEM survey showed that its members were receiving an average of more than ten of these survey requests in 2011, and some large companies say they receive as many as 300 survey requests per year.

NAEM Green Metrics That Matter Report—2012 for 35 members.

Both the direct and survey-driven approaches to data gathering are reasonable and can lead to sound ratings and valuable insights. However, both are limited in several important ways:

- The studied companies are the primary source of the data used to evaluate them. While analysts can question and probe, they have no way to determine how accurately a company has responded.

- Different areas of a company may respond differently to analyst questions. It’s hard to determine objectively from the outside which area of a company has the right perspective and which answer is correct.

- When companies get too many surveys and requests for data, they stop responding to them. This “survey fatigue” leads to gaps in the data collected. Note that only a few thousand large companies have full-time staff available to answer researcher questions.

- Often analysts cannot financially justify studying smaller companies. There is little interest in smaller companies from the investor clients who pay for most CSR data collection. As a result, most analyst-driven research covers a subset of the world’s 3,000 largest companies. There are only a few data sets bigger than this, and they cover only limited subject areas. There is very little coverage for private companies, public organizations, or companies based in emerging markets.

- A human-driven process will always involve a certain amount of interpretation of the data. This in turn can lead to biases that are hard to detect and remove.

- Each human-driven result is based on its own schema, so results from different systems are hard to compare. Companies do not understand why their rating varies from one system to the next, and this reduces their confidence in all ratings systems.
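
The last point, that results built on different schemas are hard to compare, can be illustrated with a small sketch. The source names, scores, and scales below are invented for illustration, and the simple linear rescaling is only an assumption for this example; it is not a description of how any particular ratings system works.

```python
# Hypothetical ratings for one company from three made-up sources.
# Each source uses its own scale: (score, scale_min, scale_max).
ratings = {
    "source_a": (72.0, 0.0, 100.0),  # 0-100 points
    "source_b": (3.5, 1.0, 5.0),     # 1-5 stars
    "source_c": (6.0, 0.0, 10.0),    # 0-10 index
}


def rescale(score, low, high):
    """Map a score from the range [low, high] onto a common 0-100 scale."""
    return 100.0 * (score - low) / (high - low)


# Put every rating on the same 0-100 scale so they can be set side by side.
common = {name: rescale(*rating) for name, rating in ratings.items()}

# Gap between the highest and lowest rescaled rating for this company.
spread = max(common.values()) - min(common.values())

for name, value in common.items():
    print(f"{name}: {value:.1f}")
print(f"spread: {spread:.1f}")
```

Even after the three scores share a scale, they still disagree. Rescaling aligns only the units; it does nothing about the different criteria each source chose to measure, which is the deeper reason companies see their ratings vary from one system to the next.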

It may be useful to take a look at some details of one company’s approach to a “Big Data” based analysis of CSR ratings. Our next post explains how CSRHub applies its methodology to address “Big Data” problems while also noting that every system has some limitations.

Bahar Gidwani is a Cofounder and CEO of CSRHub. Formerly, he was the CEO of New York-based Index Stock Imagery, Inc, from 1991 through its sale in 2006. He has built and run large technology-based businesses and has experience building a multi-million visitor Web site. Bahar holds a CFA, was a partner at Kidder, Peabody & Co., and worked at McKinsey & Co. Bahar has consulted to both large companies such as Citibank, GE, and Acxiom and a number of smaller software and Web-based companies. He has an MBA (Baker Scholar) from Harvard Business School and a BS in Astronomy and Physics (magna cum laude) from Amherst College. Bahar races sailboats, plays competitive bridge, and is based in New York City.
